The anatomy of plant design and the OT cyber-attack – Part 1

In my previous article, I discussed the OT cyber security skills gap. In this article, I continue that discussion by looking in more detail at how manufacturing plants are designed and where OT cyber security skills play a role. For this, I made several drawings that give an overview of the engineering process (Part 1) and of the anatomy of an OT cyber-attack against a production process (Part 2).

For me, OT cyber security requires a holistic and risk-based approach to succeed in creating a cyber-resilient plant. I discuss this on the basis of the design process for a greenfield petrochemical plant, a new installation. The process will meet the ISA / IEC 62443 requirements, but does not necessarily follow the processes suggested in some of the standards. The end result complies, but some of the steps to reach that result will differ. Let’s first discuss the main design disciplines active when we build a plant.

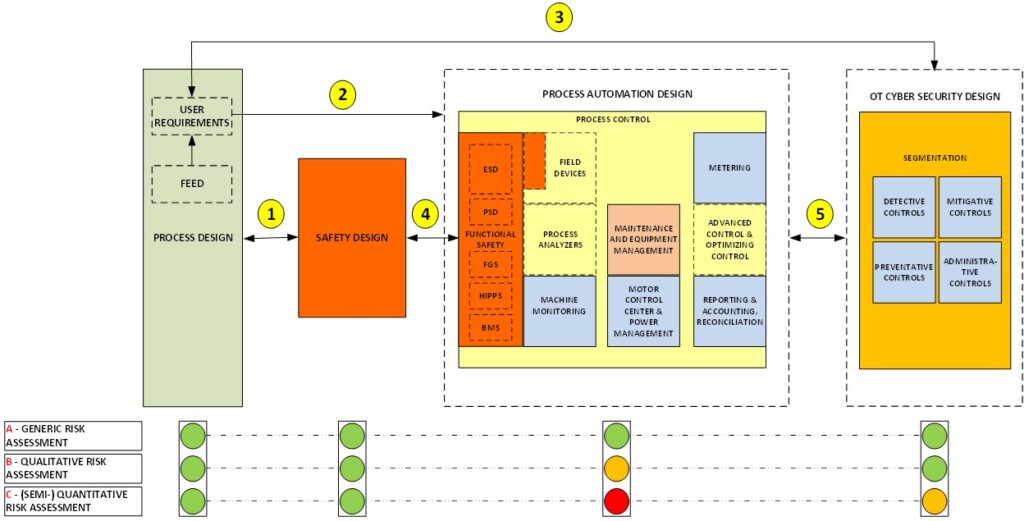

There are four design disciplines: process, safety, automation, and security design. The arrows in the diagram represent the interactions and dependencies between these disciplines that matter for OT security. A design process can be divided into four phases, which do not necessarily occur in series. Some phases are iterative and can be performed in parallel, others are staggered over time. The sequence of activities depends in part on when the information to perform the activity becomes available. The four design phases are:

1. Conceptual process design – The design begins with this phase. In this first step, the feasibility of the project is studied, together with the estimated costs and the regulations to be complied with. OT security is not directly involved in this step, other than potentially considering a preliminary OT security budget. Design and operations in the process industry must comply with many regulations, codes and industry standards. Some of these regulations, for example the regulations for societal risk and individual risk, also influence the design of OT security; others influence the design of safety and process automation. Regulations on boiler design, safety design in general, individual risk, and societal risk, for example, are related to the permission to operate the installation and as such influence the OT security protection. But OT security is not a design task at this stage.

2. Basic engineering design – In this phase, the plant layout, material and energy balances, storage requirements, material transfers, risk management workflows, and the potential incidents are considered. Risk assessments are carried out, taking into account: building placement, the storage size of hazardous materials, and knock-on effects between units and storage facilities/tanks/spheres to contain the impact of potential accidents.

If toxic and explosive vapors can result from a loss of containment, it is important to analyze the dispersion of the vapor cloud in combination with the exposure of individual workers and, potentially, the public. See as an example this study of Shell Global Solutions. Such process design information is important for safety design when categorizing the effects of process safety scenarios in the FEED phase. It matters for security as well, because these scenarios no longer apply only to accidental failures: they should now also include terrorist acts involving physical attacks (e.g. France 2017) and acts by cyber-attackers (e.g. Saudi Arabia 2017).

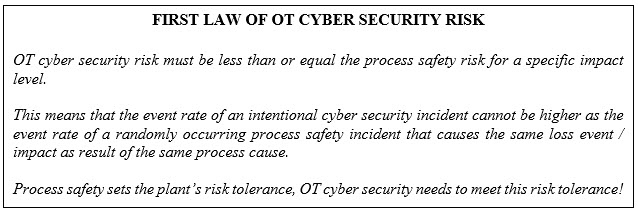

The estimated event frequencies (probability) for these scenarios and the regulations setting maximum incident frequencies are now also important for OT security design. In peacetime, regulations do not look at the cause of an industrial incident, but mainly at the consequences (deaths, irreversible injuries, environmental damage) of the incident. As long as cyberattacks are not classified as an act of cyber warfare carried out by a nation-state, the process safety failure criteria also apply to intentional failures caused by failing OT security.

3. FEED – Front End Engineering Design. This is the phase where the requirements for the process automation function become available, and with this, the OT security requirements need to be defined together with an initial design. The supplier of the automation solutions might not be selected, but at a functional level the process automation system can be designed and security requirements defined.

Process flowsheets are developed, and Piping and Instrument Drawings (P&ID) are prepared; these are both of importance for performing an initial OT security risk assessment. The FEED uses the initial OT security risk assessment to specify preliminary security requirements to be included in a Request for Proposal (RFP) written for selecting the suppliers of the process automation functions.

At this point in the design process, the process safety HAZOP (Hazard and Operability study) and LOPA (Layers Of Protection Analysis) information is not yet available, so a detailed risk assessment is not yet possible. The initial risk assessment typically uses a generic risk assessment method (based upon checklists, often linked to the plant’s security policies), but sometimes a qualitative risk assessment method is used if such a policy hasn’t been defined yet.

The strength of the detailed risk assessment is that it verifies if the target mitigated event likelihood (TMEL), specified by the process safety design team for the various impact categories, is met by the level of cyber resilience of the initial OT security design.

4. Detail engineering design. At this point in the plant design process, most information becomes available. Vendors and the main automation contractor for the various automation functions have been selected, HAZOP and LOPA have been completed, and the process automation function data flowsheets, network topology, and zone/conduit/channel diagrams are created.

Now that this data is available, it is time to conduct a detailed risk assessment. In a greenfield environment, this will normally be a quantitative risk assessment linking cyber-attack scenarios with process safety loss scenarios to verify that the initial security design meets the TMEL risk criteria. If not, adjustments need to be made.

Qualitative risk assessment methods cannot be used for justification purposes. These methods can identify and rank risk, but have no objective basis for likelihood. As such, no link to the target mitigated event likelihood criteria of process safety is possible. This does not seem to be a problem in countries where there are no clear regulations for individual risk and societal risk (for example the US, see report), but it is an issue in regions such as Europe and the Middle East where such criteria do exist.

Process safety design provides us with the process causes of the various possible process deviations. It also defines the worst-case consequences for these process causes and which safeguards are specified to reduce the risk from a random equipment failure perspective. The OT security task is to identify the cyber-attack scenarios that either cause the process anomaly while disabling or modifying the safeguard that should prevent the consequence from occurring, or abuse the safeguard to create the process anomaly. The Trisis/Triton attack (Saudi Arabia 2017) showed that such scenarios are possible: threat actors associated with Russia showed the intent to do so and acquired the capabilities needed to carry out such an attack.
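As a rough illustration of this task, the sketch below derives candidate cyber-attack scenarios from a single HAZOP row. The data model, the tag names, and the example row are all hypothetical; a real assessment would draw on the project's HAZOP / LOPA records and a repository of attack scenarios.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Safeguard:
    name: str
    programmable: bool  # True if programmable/electronic, so potentially attackable


@dataclass(frozen=True)
class HazopRow:
    deviation: str
    cause: str
    consequence: str
    safeguards: tuple


def candidate_attack_scenarios(row):
    """Derive candidate cyber-attack scenarios for one HAZOP row."""
    scenarios = []
    for safeguard in row.safeguards:
        if not safeguard.programmable:
            continue  # a purely mechanical safeguard is not a cyber target
        # Option 1: cause the process anomaly and disable/modify the safeguard
        scenarios.append(
            f"Cause '{row.deviation}' and disable or modify {safeguard.name}"
        )
        # Option 2: abuse the safeguard itself to create the process anomaly
        scenarios.append(f"Abuse {safeguard.name} to create '{row.deviation}'")
    return scenarios


# Hypothetical HAZOP row, for illustration only
row = HazopRow(
    deviation="high pressure in vessel V-101",
    cause="control valve fails open",
    consequence="loss of containment",
    safeguards=(
        Safeguard("SIF-101 high pressure trip", programmable=True),
        Safeguard("pressure relief valve", programmable=False),
    ),
)

for scenario in candidate_attack_scenarios(row):
    print(scenario)
```

Only the programmable safeguard yields scenarios here; the relief valve still counts as a protection layer, but it is not something a cyber-attacker can manipulate.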

The detailed risk assessment provides a failure rate (no longer for random, but now for intentional causes) for the cyber-attack scenarios, considering both the security measures and the topology of the original design. For each impact category, we can check whether the results meet the set event frequency limits. If not, the security design must be adapted for this attack scenario so that it does.
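The per-category check can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the impact categories, the TMEL values, and the scenario frequencies are invented placeholders, not figures from any regulation or project.

```python
from dataclasses import dataclass

# Hypothetical TMEL values (events per year) per impact category.
# Real limits come from the process safety design team and the regulator.
TMEL_PER_YEAR = {
    "safety": 1e-5,
    "environment": 1e-4,
    "financial": 1e-3,
}


@dataclass(frozen=True)
class AttackScenario:
    name: str
    impact_category: str
    # Estimated frequency of a successful attack (events per year), given
    # the security measures and topology of the current design.
    event_frequency_per_year: float


def meets_tmel(scenario):
    """Return True if the scenario's event frequency is within the TMEL."""
    limit = TMEL_PER_YEAR[scenario.impact_category]
    return scenario.event_frequency_per_year <= limit


def scenarios_needing_redesign(scenarios):
    """List the scenarios for which the security design must be adapted."""
    return [s for s in scenarios if not meets_tmel(s)]


# Hypothetical scenarios, for illustration only
scenarios = [
    AttackScenario("Manipulate SIF trip point", "safety", 5e-5),
    AttackScenario("Stop compressor via BPCS", "financial", 2e-4),
]

for s in scenarios_needing_redesign(scenarios):
    print(f"{s.name}: exceeds TMEL for {s.impact_category}")
```

In this made-up data set only the first scenario fails the check, so only that scenario would drive a change to the security design.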

For example, suppose the LOPA takes credit for a SIL 3 safeguard, and this safeguard is programmable/electronic and therefore potentially vulnerable to a cyber-attack, such as a safety instrumented function (SIF) that depends on a SIL 3 safety controller and field equipment. We then need to make sure that the likelihood (event rate) of a successful attack on the safety controller and its associated field devices remains within the limit of once every thousand years (1E-03 per year) for which the SIL 3 SIF took credit. If this is not the case, and the consequence of the incident would be a fatality, we might violate the criteria for individual and societal risk specified by the regulator. At a minimum, the OT security risk would then be higher than the safety risk, which is not allowed.

This brings me to the first law of OT cyber security risk and with that a requirement for the cyber security resilience of the installation.

If we look at Figure 1 in more detail, then information exchange #1 represents the exchange between the process safety engineers responsible for the safety design and the process design engineers responsible for the design of the overall installation.

This is a design process that includes all considerations for both process safety (which includes functional safety, the part realized by the safety instrumented system and the part that can become a target for a cyber-attack) and personal safety. Process safety is taken into account from the very first steps in plant design. This includes considerations limiting the impact of incidents by reducing the amount of hazardous materials in the production process and storage (intensification), or where possible using safer materials (substitution), or designing a more intrinsically safe production process (attenuation). Reducing process safety risk by reducing the impact also reduces OT cyber security risk and the requirements for OT cyber security. So indirectly OT cyber security always benefits from the process safety design because it needs to obey the 1st law of OT cyber security risk.

Information exchange #2 includes the results of the initial risk assessment that set the security design requirements. These design requirements should be specific and verifiable when we start building the system. A requirement such as “The design should comply with ISA / IEC 62443 Security Level 3” is not specific enough for a design. First, IEC 62443 is an industry-independent standard and therefore cannot be too specific, resulting in a level of cybersecurity driven by competitive pressures rather than risk criteria. In addition, the standard is not very specific about how it should be implemented in a real system. A designer has many options that result in different levels of resiliency, and there is no clear risk tolerance level available to drive the security design.

An example to illustrate this is: “Do we really want an operator station in the central control room (CCR) to have the same security settings as an operator station in a local equipment room (LER)?”

If we say yes to the question, this means we need to activate screen savers that enforce a login when a session times out, even for the CCR operator stations that are occupied 24×7. In an LER, we don’t want an operator station that can be accessed by non-authorized personnel. So these are different security zones, most likely with the same security level, but nevertheless with different security measures. In a CCR the process operator will prevent non-authorized personnel from accessing the station; in the LER we need a security measure to do the same.

Security Levels don’t address these details. A repository of attack scenarios and their possible security measures, analyzed by a risk assessment, specifies the need for security measures and the risk consequences at a more detailed level.
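The CCR / LER example can be written down as a small sketch to make the point concrete: both zones carry the same target security level, yet the measures for an operator station differ. The zone attributes and measure names are illustrative assumptions, not terms taken from ISA / IEC 62443.

```python
# Hypothetical zone definitions: same target security level, different context.
ZONES = {
    "CCR": {"security_level": 3, "continuously_staffed": True},
    "LER": {"security_level": 3, "continuously_staffed": False},
}


def operator_station_measures(zone_name):
    """Pick access-control measures for an operator station in a zone."""
    zone = ZONES[zone_name]
    if zone["continuously_staffed"]:
        # The process operator keeps non-authorized personnel away
        return {"operator supervision"}
    # Nobody is watching the station, so a technical measure is needed
    return {"session timeout with re-login"}


for name in ZONES:
    level = ZONES[name]["security_level"]
    print(name, level, sorted(operator_station_measures(name)))
```

A requirement that only states the security level cannot distinguish between these two stations; the difference comes from the scenario (who can physically reach the station), which is what the risk assessment captures.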

Information exchange #3 represents the OT security activity during the FEED for carrying out the initial risk assessment. It is too early at this stage for the initial design of security zones and conduits that ISA / IEC 62443 suggests. In many cases not all suppliers of the process automation systems have been selected during FEED, and automation system architecture differs considerably between vendors, which influences the zone and conduit design. The vendor selection impacts the network topology, protocol use, overall interconnectivity, and dependency between the process automation functions.

Once we enter the detailed security design, the HAZOP / LOPA results from the safety design, which define the safeguards required to meet the process safety criteria, are available. These safeguards include both the physical safeguards and the functional safety safeguards to be implemented by the SIS, such as the preventative safety instrumented functions for shutdown actions (ESD) or the mitigative controls associated with fire and gas detection (FGS). Apart from this, there are other safety-related functions, such as Process Shut Down functions (PSD) to shut down the production process under normal circumstances, HIPPS (High Integrity Pressure Protection System) preventing over-pressurization of the plant, and the BMS (Burner Management System) controlling the ignition process of industrial furnaces and boilers. A process alarm combined with an operator action, or an interlock, can also be seen as a safeguard.

Information exchange #4 represents the specification for the functional safety solution in the process automation system. For example, the various safety instrumented functions (SIF) that need to be configured/programmed; which (and how many) inputs and outputs are required; which trip points need to be set; and what possible overrides are required in support of maintenance activities. This information is important for OT cyber security, as I will explain when discussing the anatomy of the OT cyber-attack in Part 2 of this article.

The last information exchange is #5. This arrow represents all the data required for the security design. This information exchange is bi-directional because the security design might lead to different choices in the process automation design, and vice versa.

Process operations has many different tasks to perform to operate a plant. This requires a multitude of applications from different vendors, which demands a secure integration of these functions. Some functions are not allowed to have inter-dependencies; for example, control and process safety functions should be independent of each other. This is because they are both protection layers preventing serious accidents, so there shouldn’t be a single point of failure. The LOPA might take credit for both a process operator responding to a process alarm (BPCS function) and a SIF (SIS function).

This independence needs to be maintained by OT cyber security: there shouldn’t be a single point of cyber failure that can be used to conduct a coordinated attack against both the control system (BPCS) and the safety system (SIS). For example, an engineering system that hosts both the control system engineering function and the safety system engineering function compromises the independence of the two protection layers, because both functions can be attacked from a single compromised engineering station.
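A check for this kind of shared dependency could look like the sketch below. The station names and the inventory are hypothetical; in practice the data would come from the automation design documentation or an asset inventory.

```python
# Hypothetical inventory: which engineering functions each station hosts.
STATION_FUNCTIONS = {
    "ENG-01": {"BPCS engineering"},
    "ENG-02": {"SIS engineering"},
    "ENG-03": {"BPCS engineering", "SIS engineering"},
}

# Functions belonging to protection layers that must stay independent.
INDEPENDENT_LAYERS = {"BPCS engineering", "SIS engineering"}


def single_points_of_cyber_failure(station_functions):
    """Return the stations that host every function that must stay independent."""
    return sorted(
        station
        for station, functions in station_functions.items()
        if INDEPENDENT_LAYERS <= functions  # subset test: hosts both layers
    )


print(single_points_of_cyber_failure(STATION_FUNCTIONS))  # ['ENG-03']
```

Compromising ENG-03 would give an attacker access to both protection layers at once, which is exactly the coordinated attack the independence requirement is meant to rule out.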

This ends Part 1. Next, I would like to zoom in on the OT cyber-attack and describe how the anatomy of the cyber-attack links with the above design process and detailed risk assessment. See Part 2.