Comprehensive Guide to Integrated Operations (Part 3)

Principal Architecture of Data Flow in Operational Technology (OT) Environments

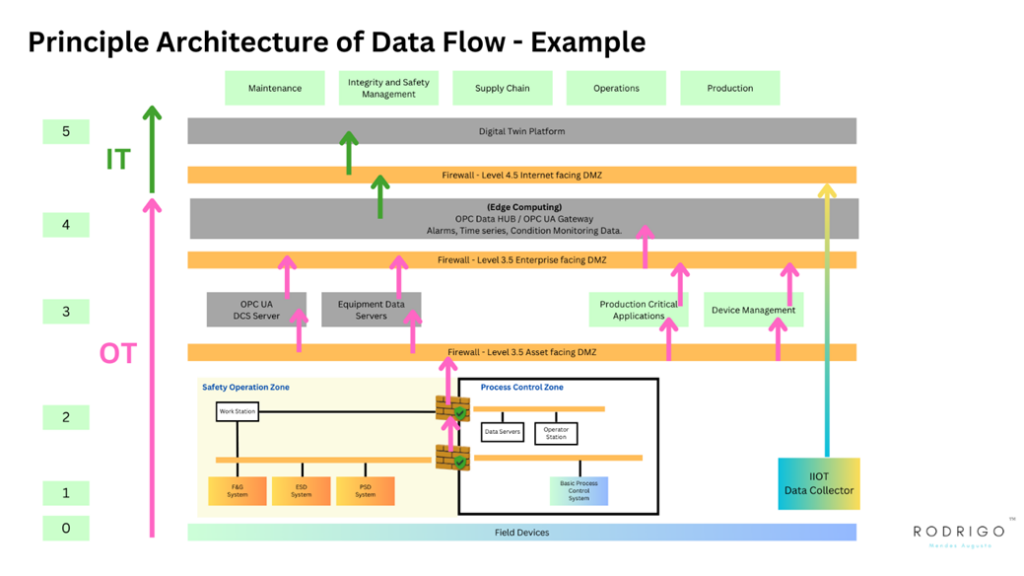

Data flow architecture in Operational Technology (OT) environments is crucial for ensuring efficient, accurate, and secure transfer of information across the levels and components of industrial systems. This architecture is designed to move data from the field level, where it is generated, to the enterprise level, where it is used for strategic decision-making. Here, we examine the principal architecture components and their functions within OT environments.

Data Acquisition Layer

This is the foundation of the data flow architecture, where data is initially collected:

Sensors and Actuators: At the field level, sensors collect physical measurements (e.g., temperature, pressure), and actuators perform actions based on control signals. This raw data is essential for real-time monitoring and control functions.

Data Acquisition Systems (DAS): These systems aggregate and digitise data from various field devices, preparing it for further processing and analysis. They ensure that data is accurately captured and transmitted to higher-level systems.
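
To make the digitising step concrete, the sketch below converts a raw analogue-to-digital converter (ADC) count from a 4 to 20 mA current loop into engineering units. The 12-bit resolution, the 0 to 20 mA input span, and the function names are assumptions chosen for illustration; real acquisition hardware will differ.

```python
def counts_to_milliamps(raw_counts: int, adc_bits: int = 12,
                        full_scale_ma: float = 20.0) -> float:
    """Convert a raw ADC count to loop current (assumes a 0..full_scale_ma input span)."""
    max_counts = (1 << adc_bits) - 1
    if not 0 <= raw_counts <= max_counts:
        raise ValueError(f"count {raw_counts} outside 0..{max_counts}")
    return raw_counts / max_counts * full_scale_ma


def milliamps_to_units(current_ma: float, range_low: float, range_high: float) -> float:
    """Scale a 4-20 mA signal linearly onto the transmitter's calibrated range."""
    if not 4.0 <= current_ma <= 20.0:
        # A current outside the live-zero range usually indicates a wiring or sensor fault.
        raise ValueError(f"loop current {current_ma:.2f} mA is outside 4-20 mA")
    return range_low + (current_ma - 4.0) / 16.0 * (range_high - range_low)


# Hypothetical pressure transmitter calibrated for 0 to 250 bar.
pressure_bar = milliamps_to_units(counts_to_milliamps(4095), 0.0, 250.0)
```

Rejecting out-of-range currents rather than clamping them is a design choice here: it keeps a broken loop from being reported as a plausible measurement.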

Edge Computing Layer

As data moves up from the acquisition layer, edge computing plays a pivotal role:

Edge Devices: These include gateways, industrial PCs, and other devices located close to data sources. They perform preliminary data processing, reducing latency and bandwidth usage by filtering, normalising, and aggregating data before sending it to centralised systems.

Local Data Storage and Analysis: Edge devices can temporarily store and perform initial analysis on data, enabling quicker responses to local events and reducing the load on central systems.
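
The filtering, normalising, and aggregating steps described above can be sketched as follows. The reading format (a dictionary with `value` and `unit` keys), the valid range, and the window size are assumptions made for this example only.

```python
from statistics import mean


def to_celsius(value: float, unit: str) -> float:
    """Normalise a temperature reading to degrees Celsius."""
    if unit == "C":
        return value
    if unit == "F":
        return (value - 32.0) * 5.0 / 9.0
    raise ValueError(f"unsupported unit: {unit}")


def summarise(readings, valid_range=(-40.0, 150.0), window=3):
    """Normalise units, drop implausible values, then average in fixed windows."""
    low, high = valid_range
    normalised = [to_celsius(r["value"], r["unit"]) for r in readings]
    filtered = [v for v in normalised if low <= v <= high]
    # One mean per window is sent upstream instead of every raw sample.
    return [mean(filtered[i:i + window]) for i in range(0, len(filtered), window)]


readings = [
    {"value": 20.0, "unit": "C"},
    {"value": 68.0, "unit": "F"},   # same temperature, different unit
    {"value": 999.0, "unit": "C"},  # implausible spike, filtered out
    {"value": 22.0, "unit": "C"},
]
summary = summarise(readings)
```

Four raw readings collapse into a single averaged value, which is the bandwidth saving the edge layer is meant to deliver.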

Communication and Network Infrastructure

The backbone of data flow in OT environments, this infrastructure ensures that data is securely and efficiently transmitted between different layers and locations:

Industrial Networks: These include wired and wireless networks that carry data within industrial facilities. Protocols such as EtherNet/IP, PROFINET, and Modbus provide standardised communication between devices.

Data Gateways and Routers: These devices manage data traffic, ensuring that data is securely routed between networks and systems, often incorporating security measures like firewalls and VPNs to protect data integrity and confidentiality.

Data Processing and Control Layer

At this level, data is further processed, analysed, and used for control:

Programmable Logic Controllers (PLCs) and Distributed Control Systems (DCS): These systems use processed data to execute control logic, managing industrial processes based on predefined parameters and real-time data inputs.

Supervisory Control and Data Acquisition (SCADA): SCADA systems compile data from various sources, providing a unified interface for monitoring and controlling industrial processes across different locations and scales.

Information Management and Integration Layer

This layer focuses on transforming processed data into actionable insights and integrating it with business processes:

Manufacturing Execution Systems (MES): These systems manage and document production processes, linking real-time operational data with plant management tasks.

Data Historians: They collect, store, and retrieve historical data, providing a comprehensive record of operational data for analysis, reporting, and decision support.

Enterprise and Analytics Layer

The topmost layer where data is used for strategic analysis and decision-making:

Enterprise Resource Planning (ERP) Systems: These integrate operational data with business processes, facilitating resource planning, financial management, and strategic decision-making.

Business Intelligence (BI) and Advanced Analytics Platforms: Utilising data from various OT and IT sources, these platforms provide insights, trends, and predictive analytics to inform business strategies and operational improvements.

Integration and Security Across Layers

Data Integration: Ensuring seamless integration between layers is essential for consistent, accurate, and timely data flow. This involves standardising data formats, protocols, and interfaces to enable effective communication and exchange across different systems and layers.

Cybersecurity: As data flows through various layers, implementing robust cybersecurity measures at each stage is critical. This includes encryption, authentication, access control, and continuous monitoring to protect against unauthorised access and cyber threats.

The principal architecture of data flow in OT environments is designed to support efficient, secure, and reliable data management across all levels of industrial operations. By understanding and optimising this architecture, organisations can enhance their operational visibility, control, and decision-making capabilities, improving efficiency, safety, and competitiveness.

Additional Requirements for Data Flow and Integration within Unmanned Operations

Uncrewed (unmanned) operations in industrial environments, particularly within Operational Technology (OT), present unique challenges and requirements for data flow and integration. These systems must operate autonomously, often in remote or hazardous locations, necessitating robust, reliable, and secure data communication architectures. Here, we explore the additional requirements for effective data flow and integration within uncrewed operations.

Enhanced Reliability and Redundancy

In uncrewed operations, system failures or data losses can have significant consequences, making reliability and redundancy critical:

Fault-tolerant Systems: Implementing systems designed to continue operating effectively during a component failure. This includes redundant hardware and software systems that seamlessly take over should a primary system fail.

Data Redundancy: Storing critical data in multiple locations or formats to prevent loss. This includes techniques like RAID (Redundant Array of Independent Disks) for local data storage and cloud-based backup solutions for remote data redundancy.
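
As a minimal sketch of data redundancy, the class below writes each record to several replicas and verifies a checksum on read, falling back to the next replica when a copy is missing or corrupted. The in-memory dictionaries stand in for separate disks or sites, and the class name `ReplicatedStore` is hypothetical.

```python
import hashlib


class ReplicatedStore:
    """Keep every record in several replicas and verify integrity on read."""

    def __init__(self, replica_count: int = 2):
        # Each dict stands in for an independent storage location.
        self.replicas = [dict() for _ in range(replica_count)]

    def put(self, key: str, payload: bytes) -> None:
        digest = hashlib.sha256(payload).hexdigest()
        for replica in self.replicas:
            replica[key] = (payload, digest)

    def get(self, key: str) -> bytes:
        for replica in self.replicas:
            entry = replica.get(key)
            if entry is None:
                continue  # copy missing in this replica, try the next one
            payload, digest = entry
            if hashlib.sha256(payload).hexdigest() == digest:
                return payload  # first intact copy wins
        raise KeyError(f"no intact copy of {key!r}")
```

The same read-with-fallback pattern applies whether the replicas are local disks or a remote backup; only the storage calls would change.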

Autonomous Data Handling and Decision-making

Without on-site personnel, unmanned systems must have enhanced capabilities for autonomous data handling and decision-making:

Advanced Data Processing: Implementing edge computing devices capable of processing and analysing data locally, allowing for real-time decision-making without constant communication with central systems.

Automated Decision Algorithms: Developing sophisticated algorithms that can make operational decisions based on real-time data and predefined parameters, reducing the need for human intervention.
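
A very simple instance of an automated decision based on real-time data and predefined parameters is threshold control with hysteresis. The tank-level scenario, the threshold values, and the function name are assumptions for illustration; production logic would normally run in a PLC or safety system rather than in Python.

```python
def next_pump_state(level_pct: float, pump_on: bool,
                    start_above: float = 80.0, stop_below: float = 30.0) -> bool:
    """Decide whether a drain pump should run, using two thresholds (hysteresis)."""
    if level_pct >= start_above:
        return True       # tank nearly full: start pumping
    if level_pct <= stop_below:
        return False      # tank drained far enough: stop pumping
    return pump_on        # between thresholds: keep the current state


# Replay a short sequence of hypothetical level measurements.
state = False
history = []
for level in [50.0, 85.0, 60.0, 25.0, 40.0]:
    state = next_pump_state(level, state)
    history.append(state)
```

The gap between the two thresholds prevents the pump from switching rapidly when the level hovers around a single set point.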

Robust Communication Networks

Uncrewed operations often occur in remote or challenging environments where traditional communication networks may be unreliable:

Satellite and Radio Communications: Utilising satellite or long-range radio communications to ensure continuous data flow even in remote locations.

Mesh Networks: Implementing self-healing mesh network technologies that automatically reroute data if a connection is lost, ensuring continuous communication.
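
The rerouting behaviour of a self-healing mesh can be illustrated with a breadth-first search that ignores failed links. This is a sketch of the idea only: the four-node topology is invented, and real mesh protocols use their own routing algorithms.

```python
from collections import deque


def find_route(links, source, target, failed=frozenset()):
    """Return the shortest hop path from source to target, skipping failed links."""
    queue = deque([[source]])
    visited = {source}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for neighbour in sorted(links.get(node, ())):
            link = frozenset((node, neighbour))
            if neighbour in visited or link in failed:
                continue
            visited.add(neighbour)
            queue.append(path + [neighbour])
    return None  # target unreachable with the remaining links


# Hypothetical mesh: A reaches D through either B or C.
mesh = {"A": {"B", "C"}, "B": {"A", "D"}, "C": {"A", "D"}, "D": {"B", "C"}}
normal_route = find_route(mesh, "A", "D")
rerouted = find_route(mesh, "A", "D", failed={frozenset(("B", "D"))})
```

When the link between B and D is marked as failed, the search returns the alternative path through C without any change to the topology description.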

Enhanced Security Measures

The autonomous nature of uncrewed operations, coupled with their often remote locations, makes them particularly vulnerable to cybersecurity threats:

End-to-end Encryption: Ensuring that all data transmitted to and from unmanned systems is encrypted, protecting sensitive information from interception.

Regular Security Updates and Patches: Automating the deployment of security updates and patches to ensure that unmanned systems are protected against the latest cybersecurity threats.

Energy-efficient Data Transmission and Processing

Uncrewed operations must often rely on limited power sources, making energy efficiency a critical consideration:

Data Compression: Implementing data compression techniques to reduce the size of the data being transmitted, minimising energy consumption associated with data transmission.

Energy-efficient Hardware: Utilising low-power hardware components for data processing and communication to extend the operational life of unmanned systems.
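
As a small illustration of data compression before transmission, the sketch below serialises a batch of telemetry samples to compact JSON and compresses it with the standard-library `zlib` module. The sample structure and tag name are assumptions; constrained devices often use binary encodings instead of JSON.

```python
import json
import zlib


def pack(samples) -> bytes:
    """Serialise samples as compact JSON and compress them for transmission."""
    raw = json.dumps(samples, separators=(",", ":")).encode("utf-8")
    return zlib.compress(raw, level=9)


def unpack(blob: bytes):
    """Reverse pack(): decompress and parse the JSON payload."""
    return json.loads(zlib.decompress(blob).decode("utf-8"))


# Hypothetical batch of slowly changing readings from one tag.
batch = [{"tag": "TT-101", "t": i, "value": 21.5} for i in range(200)]
raw_size = len(json.dumps(batch, separators=(",", ":")).encode("utf-8"))
packed = pack(batch)
```

Batching samples before compressing matters as much as the algorithm: repetitive telemetry compresses far better in bulk than one sample at a time.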

Scalability and Flexibility

As uncrewed operations evolve, the systems must be scalable and flexible to accommodate new technologies, operational goals, and environmental conditions:

Modular System Design: Designing systems with modular components that can be easily upgraded or replaced.

Flexible Software Architecture: Developing software with a modular, microservices architecture to allow for easy updates and integration of new functionalities.

Environmental Monitoring and Adaptation

Unmanned systems must be able to operate effectively in a wide range of environmental conditions:

Environmental Sensing: Integrating environmental sensors to monitor conditions such as temperature, humidity, and atmospheric pressure, allowing systems to adapt their operation as needed.

Ruggedised Equipment: Employing ruggedised equipment designed to withstand extreme conditions, ensuring reliable operation in environments that may be corrosive, explosive, or subject to severe temperatures or pressures.

Predictive Maintenance and Self-Diagnostics

To minimise downtime and prevent failures, unmanned systems require advanced maintenance capabilities:

Predictive Maintenance: Utilising data analytics and machine learning to predict equipment failures before they occur, allowing for proactive maintenance.

Self-Diagnostic Capabilities: Implementing self-diagnostic functions that can detect, report, and sometimes rectify operational issues without human intervention.
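
A basic building block for both predictive maintenance and self-diagnostics is detecting when a measurement departs from its recent behaviour. The sketch below uses a rolling z-score as a simple statistical stand-in for the analytics and machine-learning methods mentioned above; the window size, threshold, and vibration values are assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(values, window=5, threshold=3.0):
    """Return indices whose value deviates strongly from the preceding window."""
    flagged = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: any change at all is treated as anomalous.
            if values[i] != mu:
                flagged.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical vibration amplitudes with one spike at index 6.
vibration = [1.0, 1.1, 0.9, 1.0, 1.1, 1.0, 5.0, 1.0]
suspect_indices = flag_anomalies(vibration)
```

A flagged index would typically raise a maintenance alert or trigger a more detailed diagnostic routine rather than stop the equipment directly.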

By addressing these additional requirements, uncrewed operations can achieve high levels of efficiency, safety, and autonomy. Data flow and integration are then maintained reliably, supporting the continuous operation of these advanced systems in various industrial contexts.

Data Historian Functions, Necessary for Digital Twin Strategy, Related to OT Information

Data historians are integral components of industrial operational technology (OT) systems. They are designed to accurately collect, store, and retrieve large volumes of time-stamped production data. This capability is fundamental to industrial digitalisation, serving as the backbone for data analysis, process optimisation, and decision-making. In modern industrial settings, the role of data historians extends beyond simple data storage; they facilitate real-time data management and serve as a reliable source for historical data analysis.

Role of Data Historians in Digital Twin Development

The implementation of digital twins, which are dynamic virtual representations of physical systems, relies heavily on historical data from data historians. These virtual models simulate real-world assets, processes, and systems, allowing for detailed analysis and prediction. For a digital twin to function effectively, it must be fed with accurate and comprehensive historical data. This data enables the digital twin to mirror the physical entity’s past states and behaviours, which is essential for predictive analytics, troubleshooting, and process optimisation.

Critical Functions of Data Historians in Supporting Digital Twin Strategies

Time-Series Data Collection: Data historians collect time-series data from various OT devices and systems. This data is critical for constructing a comprehensive historical context for each component of the digital twin.

High-Fidelity Data Storage and Retrieval: They provide high-fidelity, granular historical data, enabling the digital twin to simulate past conditions accurately. This feature is crucial for analysing performance over time and understanding the impact of different variables on the system.

Data Integration and Synchronisation: Effective digital twin strategies require data integration from diverse sources. Data historians facilitate the synchronisation of this data, ensuring that the digital twin reflects a unified and accurate representation of the physical system.

Scalability and Security: As digital twin technologies evolve, data historians must scale accordingly to handle increased data volumes and types. Additionally, ensuring the security of this data is paramount, especially in critical infrastructure sectors where data breaches can have severe consequences.

Analytics and Visualisation Support: Data historians support advanced analytics and visualisation tools, enabling stakeholders to extract actionable insights from historical data. This capability is essential for continuously improving and optimising the digital twin models.
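
The collection and retrieval functions listed above can be sketched with a minimal in-memory store for one tag. This is not how any particular historian product works; the class name `TagHistory` and its methods are hypothetical, and real historians add compression, persistence, and far larger scale.

```python
from bisect import bisect_left, bisect_right, insort


class TagHistory:
    """Time-ordered samples for a single tag, with range and point-in-time queries."""

    def __init__(self):
        self._samples = []  # sorted list of (timestamp, value) tuples

    def record(self, timestamp: float, value: float) -> None:
        # insort keeps the list ordered even if samples arrive late.
        insort(self._samples, (timestamp, value))

    def query(self, start: float, end: float):
        """Return all samples with start <= timestamp <= end."""
        lo = bisect_left(self._samples, (start, float("-inf")))
        hi = bisect_right(self._samples, (end, float("inf")))
        return self._samples[lo:hi]

    def value_at(self, timestamp: float):
        """Return the most recent value at or before timestamp, or None."""
        idx = bisect_right(self._samples, (timestamp, float("inf")))
        return self._samples[idx - 1][1] if idx else None
```

A digital twin replaying a past operating state would rely on a query like `value_at`, which returns the last known value for each tag at the moment being simulated.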

Challenges and Considerations

While data historians are vital for digital twin strategies, there are challenges to consider:

Data Quality and Consistency: Ensuring that the historical data is high quality, consistent, and free from gaps is essential for the accuracy of digital twin simulations.

Interoperability: The ability of data historians to integrate with different systems, platforms, and digital twin solutions is crucial for seamless operations.

Scalability: As the scope of digital twin applications grows, data historians must be able to handle an increasing amount of data without compromising performance.

Cybersecurity: Protecting the data within historians from cyber threats is crucial, especially given the increasing integration of IT and OT environments.
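
One practical check for the data quality challenge is scanning a tag's timestamps for gaps longer than the expected sampling interval. The sketch below assumes timestamps in seconds and a tolerance of 50 percent; both are illustrative choices.

```python
def find_gaps(timestamps, expected_interval: float, tolerance: float = 0.5):
    """Return (start, end) pairs where consecutive samples are too far apart."""
    limit = expected_interval * (1.0 + tolerance)
    ordered = sorted(timestamps)
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a > limit]


# Hypothetical one-second data with samples missing between t=2 and t=5.
gaps = find_gaps([0, 1, 2, 5, 6], expected_interval=1.0)
```

Reporting the gap boundaries, rather than silently interpolating across them, lets the digital twin decide whether the affected period is usable.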

Data historians are pivotal in successfully implementing digital twin strategies in industrial settings. By providing reliable, time-stamped, and comprehensive historical data, they enable the digital twin to function as an accurate and effective virtual counterpart to physical systems. However, to fully leverage the benefits of data historians, industrial entities must address data quality, interoperability, scalability, and security challenges. Doing so will ensure that digital twin strategies are robust, reliable, and capable of driving significant operational improvements.

Requirements for OT/IT OPC UA Gateway in Integrated Operations

The OPC Unified Architecture (OPC UA) gateway plays a crucial role in bridging Operational Technology (OT) and Information Technology (IT) systems within industrial environments. This integration is essential for achieving comprehensive visibility, real-time data exchange, and enhanced decision-making capabilities. Below, we detail the specific requirements for implementing an effective OT/IT OPC UA gateway.

Security

Security is a paramount concern when integrating OT and IT systems, especially given the increasing frequency and sophistication of cyber threats:

Encryption: The gateway must support strong encryption for data in transit, such as current versions of TLS (legacy SSL versions are deprecated), to protect sensitive information from being intercepted or tampered with during transmission.

Authentication and Authorisation: Robust user authentication and role-based access control mechanisms should be in place to ensure that only authorised personnel can access or modify data.

Audit Trails: Maintaining comprehensive logs of all data exchanges, access attempts, and system changes for monitoring and forensic analysis.
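
Role-based access control and audit logging can be combined so that every access decision, allowed or denied, leaves a record. The sketch below is a simplified illustration: the roles, permissions, user names, and node identifier are invented, and a real gateway would rely on the security mechanisms of its OPC UA stack rather than a hand-written check.

```python
from datetime import datetime, timezone

# Hypothetical role definitions; a real deployment would load these from configuration.
ROLE_PERMISSIONS = {
    "operator": {"read"},
    "engineer": {"read", "write"},
}


class AccessController:
    """Check an action against the user's role and log every attempt."""

    def __init__(self, user_roles):
        self.user_roles = dict(user_roles)
        self.audit_log = []

    def authorise(self, user: str, action: str, node: str) -> bool:
        role = self.user_roles.get(user)
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        # Denied attempts are logged too, since they matter most for forensics.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "node": node,
            "allowed": allowed,
        })
        return allowed
```

Unknown users fall through to an empty permission set, so the default outcome is denial.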

Interoperability

A vital function of the OPC UA gateway is to facilitate seamless communication between diverse systems and devices:

Protocol Conversion: Ability to convert between different communication protocols used in OT and IT environments, ensuring compatibility and enabling smooth data flow.

Data Mapping and Translation: The gateway should provide functionalities to map and translate data formats between different systems, ensuring that data is correctly interpreted across the OT/IT divide.

Standards Compliance: Adherence to industry standards, such as OPC UA, MQTT, AMQP, and others, to ensure broad compatibility and interoperability with various systems and equipment.
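
Data mapping and translation often comes down to a lookup table that turns a raw OT value into a named, scaled, self-describing message for IT consumers. In the sketch below the register numbers, tag names, and scale factors are all hypothetical, and JSON is used only as a convenient IT-side format.

```python
import json

# Hypothetical mapping from device register to IT-side tag definition.
TAG_MAP = {
    40001: {"name": "reactor.temperature", "scale": 0.1, "unit": "degC"},
    40002: {"name": "reactor.pressure", "scale": 0.01, "unit": "bar"},
}


def translate(register: int, raw_value: int) -> str:
    """Convert a raw register value into a JSON message with name, value, and unit."""
    entry = TAG_MAP.get(register)
    if entry is None:
        raise KeyError(f"register {register} has no mapping")
    message = {
        "name": entry["name"],
        "value": round(raw_value * entry["scale"], 6),
        "unit": entry["unit"],
    }
    return json.dumps(message, sort_keys=True)


payload = translate(40001, 215)
```

Raising an error for unmapped registers, rather than forwarding the raw number, prevents values from crossing the OT/IT divide without their intended meaning.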

Scalability and Performance

As industrial operations grow and evolve, the OPC UA gateway must be able to scale accordingly:

Modular Architecture: Design that allows for easy expansion or upgrades as the number of connected devices and data volume increases.

High Throughput and Low Latency: Capable of handling high data volumes with minimal delay to meet real-time processing requirements of industrial applications.

Load Balancing: Ability to distribute data processing and communication loads efficiently to maintain performance and avoid bottlenecks.

Reliability and Redundancy

In critical industrial environments, the OPC UA gateway must operate reliably under all conditions:

Failover Mechanisms: Automatic switch-over to backup systems or channels in the event of a failure to ensure continuous operation.

Heartbeat and Health Monitoring: Regular checks to ensure the gateway and its connections are functioning correctly, with alerts for any anomalies or malfunctions.

Robust Design: Built to withstand harsh industrial environments, including resistance to dust, moisture, and extreme temperatures.
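
Heartbeat monitoring and failover fit together naturally: each gateway instance reports in periodically, and the first instance in a preference list that is still reporting becomes the active one. The timeout value and instance names below are assumptions, and timestamps are passed in explicitly so the behaviour is easy to follow.

```python
class HeartbeatMonitor:
    """Track the last heartbeat per instance and choose the active one."""

    def __init__(self, timeout_s: float = 5.0):
        self.timeout_s = timeout_s
        self.last_seen = {}

    def beat(self, name: str, now: float) -> None:
        self.last_seen[name] = now

    def is_alive(self, name: str, now: float) -> bool:
        seen = self.last_seen.get(name)
        return seen is not None and now - seen <= self.timeout_s

    def select_active(self, preferred_order, now: float):
        """Return the first live instance in preference order, or None."""
        for name in preferred_order:
            if self.is_alive(name, now):
                return name
        return None  # nothing is reporting: raise an alert upstream
```

With instances named `primary` and `backup`, the backup is selected only once the primary has missed its heartbeat for longer than the timeout, and the primary regains control as soon as it reports again.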

Data Management and Integration

Effective data management capabilities are essential for maximising the utility of the data exchanged through the gateway:

Data Aggregation and Filtering: Ability to aggregate data from multiple sources and filter out irrelevant or redundant information to optimise bandwidth and storage.

Contextualisation and Enrichment: Adding context to the data, such as time stamps, source information, and metadata, to enhance its value and usability in IT applications.

Historisation and Archiving: Storing historical data for trend analysis, reporting, and compliance purposes, with efficient retrieval capabilities.
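
Filtering and contextualisation can be sketched as two small steps: a deadband filter that forwards a sample only when the value has changed meaningfully, and an enrichment step that attaches source and unit metadata. The deadband size, tag source, and field names are assumptions for this example.

```python
def deadband_filter(samples, deadband: float):
    """Keep a sample only if it differs from the last kept value by at least deadband."""
    kept = []
    last_value = None
    for timestamp, value in samples:
        if last_value is None or abs(value - last_value) >= deadband:
            kept.append((timestamp, value))
            last_value = value
    return kept


def enrich(sample, source: str, unit: str) -> dict:
    """Attach context so the value is meaningful outside the OT system."""
    timestamp, value = sample
    return {"timestamp": timestamp, "value": value, "source": source, "unit": unit}


raw = [(0, 10.0), (1, 10.1), (2, 10.6), (3, 10.7)]
forwarded = [enrich(s, source="line1/TT-101", unit="degC")
             for s in deadband_filter(raw, deadband=0.5)]
```

Here four raw samples become two forwarded messages, each carrying enough context to be interpreted by an IT application on its own.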

Usability and Maintenance

The gateway should be user-friendly and maintainable to ensure its long-term effectiveness:

Intuitive User Interface: Easy-to-use interface for configuring, monitoring, and managing the gateway, accessible to OT and IT personnel.

Comprehensive Documentation: Detailed user guides, technical documentation, and troubleshooting resources.

Support and Updates: Regular software updates and access to technical support to address emerging threats, compatibility issues, and operational requirements.

Compliance and Certification

Finally, the OPC UA gateway must meet relevant regulatory and industry standards:

Certification: Obtaining certifications from relevant bodies, such as the OPC Foundation, to validate compliance with OPC UA and other standards.

Regulatory Compliance: Adhering to regional and industry-specific regulations, such as GDPR for data privacy or ISA/IEC 62443 for industrial cybersecurity.

By meeting these requirements, the OPC UA gateway can effectively bridge the gap between OT and IT systems and provide secure, reliable, and efficient data exchange. This integration enables advanced analytics, predictive maintenance, and other Industry 4.0 initiatives, ultimately driving operational excellence and business value.