Four security engineering simplifiers

We need to decide which security measures NOT to take

I have good news for you: There are two kinds of security decisions, and so far, I bet you only pay attention to one of them. Why is this good news? It means you have a big lever to improve your ICS security: just start paying attention to the second kind of decision, security-by-design decisions.

Where do you spend your security money?

To begin, please reflect for a moment: What are your biggest ICS security issues? Where will you spend the most time and resources this year? What tools and services will you buy?

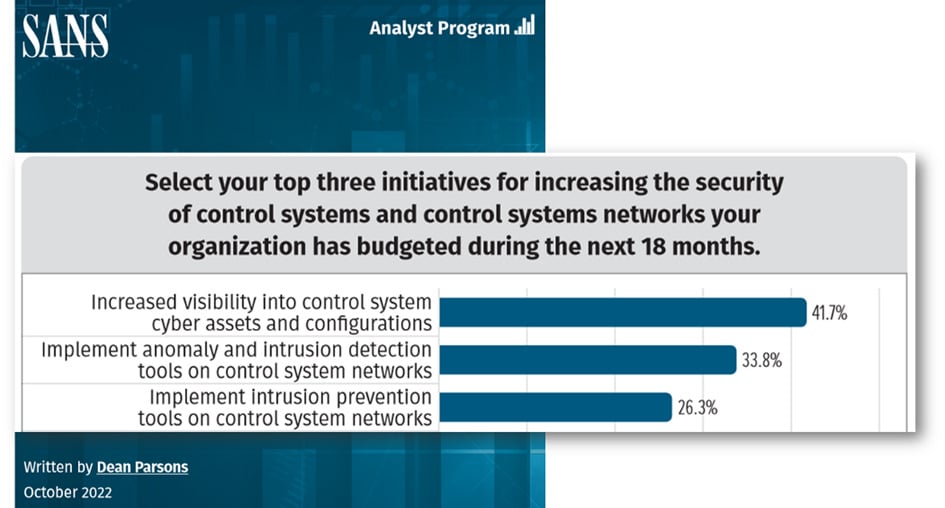

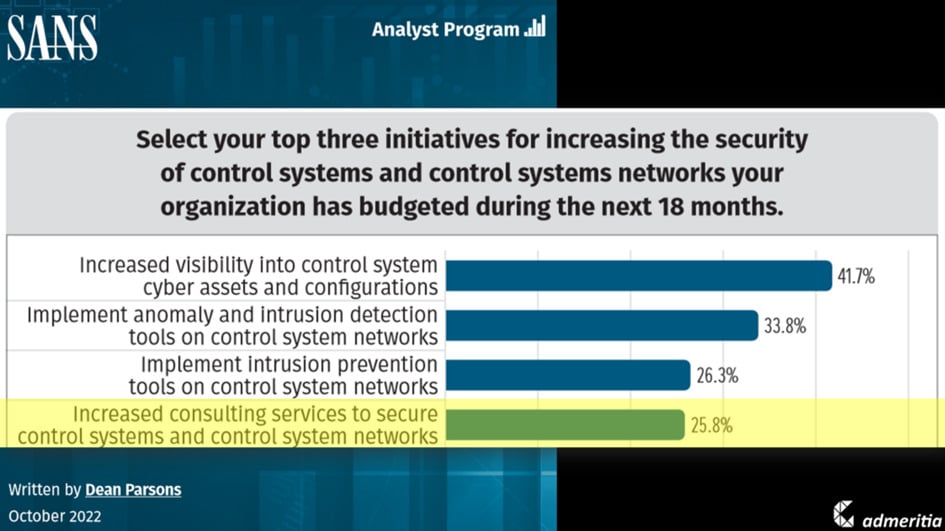

Unfortunately, I can’t hear your answers, but luckily, SANS took care of that some months ago. They ran a survey asking that very question.

Let’s look at the Top 3 answers for a moment.

The Top 3 investments are in tools for asset and configuration visibility, anomaly and intrusion detection, and intrusion prevention.

What do these three have in common?

They’re all tools that help you make better security decisions during the operation of your ICS: What do I have? Does it run normally? If not, what can I do? All three could be called “security operations” tools.

Security operations tools help us defend our systems. Many other tools and services that you probably use fall into the “security operations” category as well.

Yin and Yang of Security Decision-making

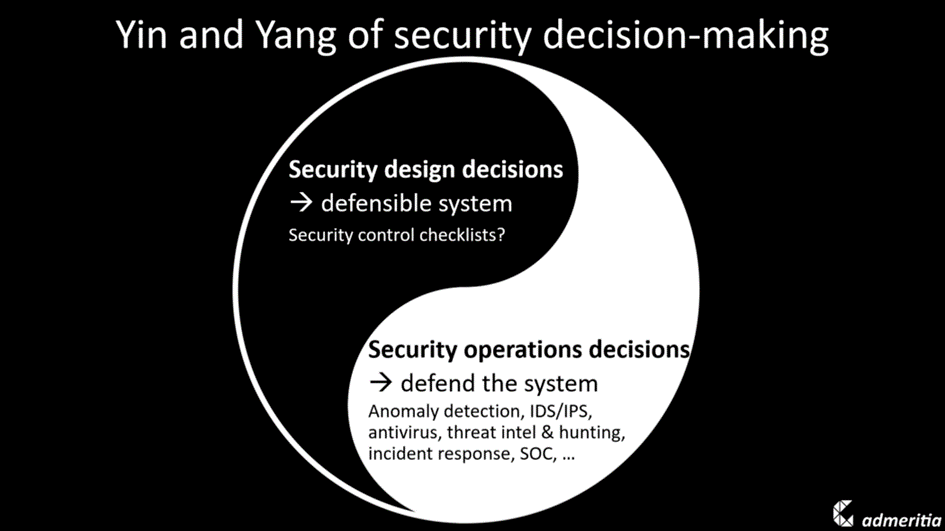

There’s nothing wrong with investing in security operations. Making informed security operations decisions is absolutely essential. I would even call it one half of the yin and yang of security decision-making.

But there’s another, neglected half: Security design decisions.

In security operations, you’re making decisions about defending your system. In security design, you’re making decisions about building a defensible system. You’re deciding which security measures you take.

And while we make highly informed, well-prepared, tool-supported security operations decisions, even to the point of using AI: Can you think of a single tool that helps you make security design decisions?

All we have to support those security design decisions is… security control checklists…?!

Well, in an ideal world, checklists are great. In an ideal world, you’d just take every measure on one of these checklists. In reality, however, you have restrictions: Your budget is limited. Your time is limited. Not everything is technically feasible. You may need to comply with regulations.

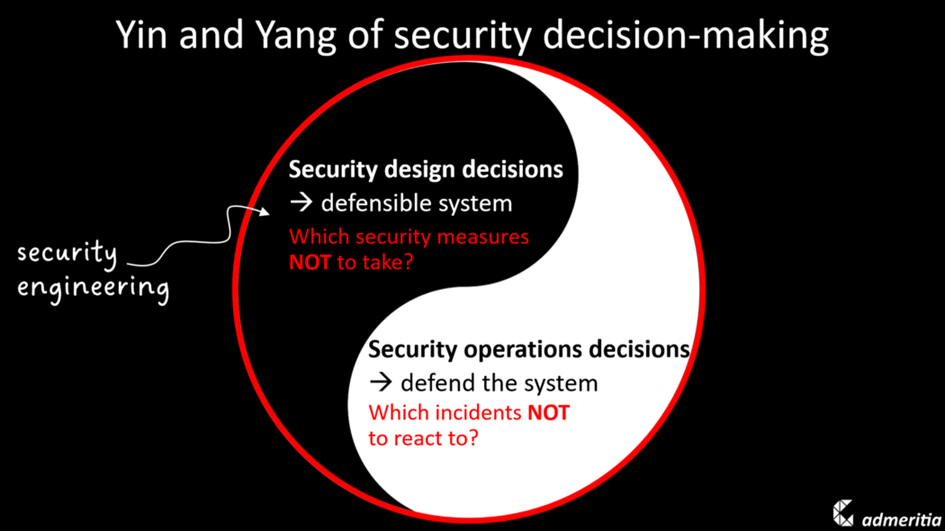

So in reality, the challenge is not to decide which security measures to take – you could use checklists for that – but to decide which security measures NOT to take.

Compare that to security operations, where operators tend to be overwhelmed by alerts: the real challenge is to filter out the incidents you do NOT have to react to. You can tell a good tool from a bad tool by how well it supports you in deciding what matters.

And the same is true for security design. That’s why you should not just tick off a checklist, but actually engineer your security design decisions. Security engineering helps you make an informed decision about which measures NOT to take.

That’s massive untapped potential right there! If you don’t do any security engineering, if you don’t make security design decisions consciously, you are left trying to implement ALL the measures.

You should be investing in security engineering not despite, but BECAUSE of your limited security budget.

Why don’t ICS engineers do security by design already?

We’re currently working on a research project on security by design for ICS. We’ve asked our industry partners, INEOS (a global producer of chemicals) and HIMA (a manufacturer of control system components for functional safety), what holds them back from considering security during engineering.

The answers are simple, and probably true for your engineers as well:

ICS engineers have no time and no security expertise to do security engineering during design.

This is not likely to change. We won’t turn engineers into full-blown security experts anytime soon, and I’m not even sure we’d want that.

Instead, we need to define security engineering in a way that is doable for ICS engineers. That’s what we’ve been working on with INEOS and HIMA for the last two years, and it’s what I want to share with you now:

Four security engineering simplifiers that empower engineers who lack time and security expertise to make security design decisions that fit within their managers’ limited security budgets.

1st simplifier: Filters

The first simplifier is: Filters. If your time is limited, filtering out anything irrelevant is essential to make sure you spend your scarce security engineering time where it matters most.

Filter 1: Security Parameters

When we asked our research partners, INEOS and HIMA, what they wanted from us, this was the first thing they said:

Tell us where we need to pay attention. Tell us what matters.

To understand that need, you must understand that the ICS engineering process is utterly complex and interdisciplinary. ICS engineers like to complain that they are always the last ones to come in, after all the other domains (process engineering, electrical, and mechanical) have done their job.

You could say that in the engineering of a plant, ICS engineers are always late to the party. And if ICS engineers are late, security engineers are super late. We come in even after the ICS engineers. And in the meantime, a huge haystack of engineering information has been created.

Out of that haystack of engineering information – what is security-relevant? It’s incredibly hard to put your finger on it.

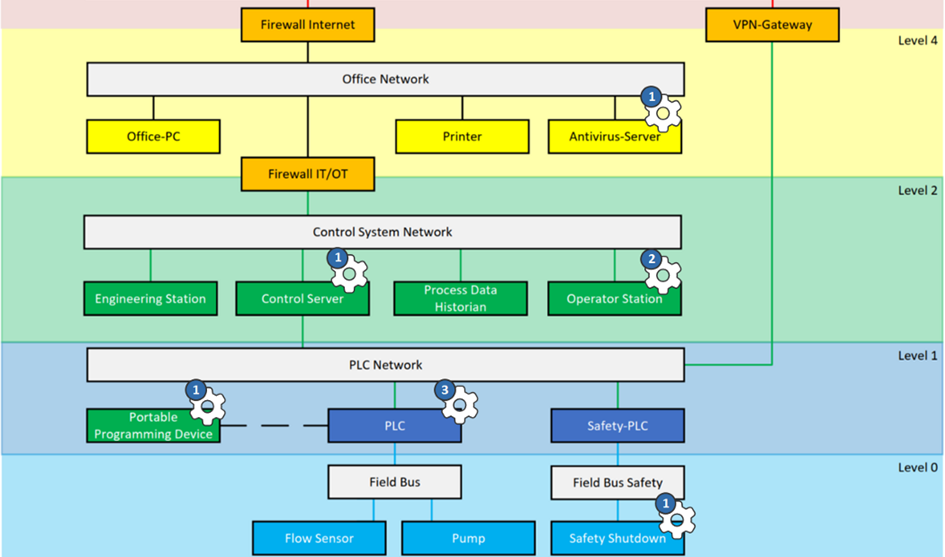

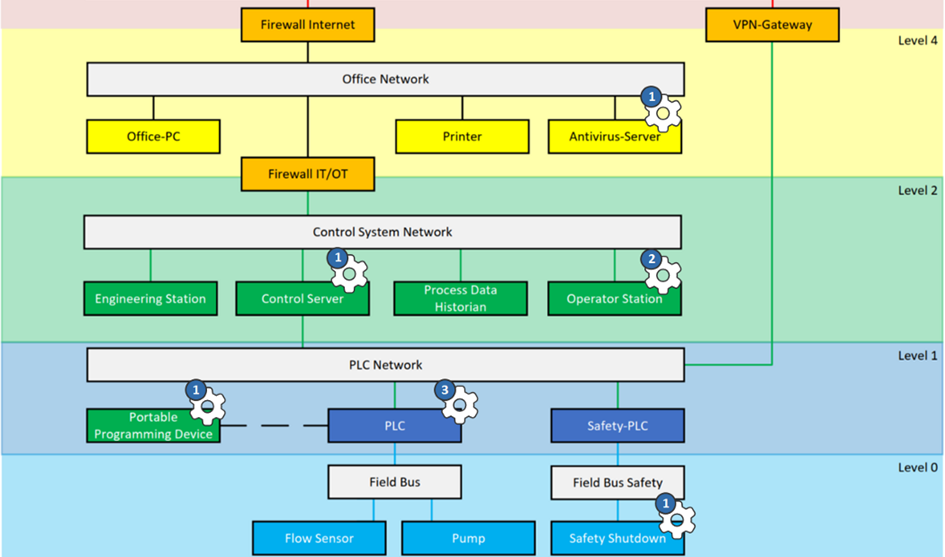

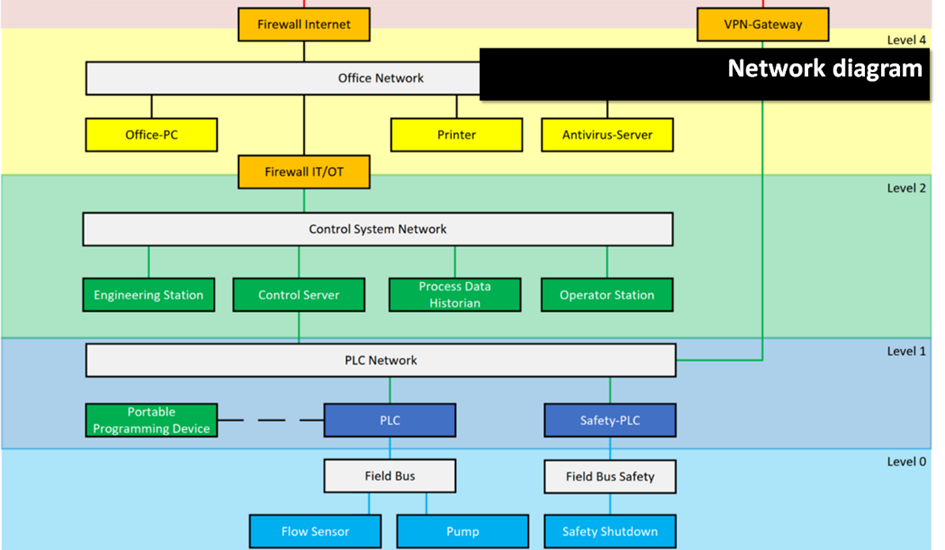

Let’s look at a simple example OT network. For security, in theory, everything could matter, because everything could be attacked or be used for an attack: A printer somewhere in a remote corner of your network. A tiny USB drive. Some configuration detail…

So we created a concept that makes security decisions visible. It’s called security parameters.

You can think of a security parameter as a little cogwheel in your big engineering information haystack that, if adjusted, impacts the security posture of your overall system.

Many security parameters are the ones you would expect, like authentication mechanisms.

Some are less obvious. For example, whether a safety shutdown is implemented electronically or mechanically may not look like a security decision at first sight. But of course, it is, because mechanical shutdowns cannot be hacked remotely.

And then, there are heaps of “insecure by design features” in ICS. These are legitimate features that everyone builds into their systems without security in mind, but that do have an impact on security. For example, the fact that you can update your PLC logic during operations is a security decision, but it is mostly not made with security in mind. Thus, security parameters also make “insecure by design features” visible.

Security parameters are the cogwheels in your haystack of engineering information. They help you focus on the security design decisions that matter.
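For readers who think in data structures, here is a minimal Python sketch of how a security parameter could be recorded as data. It is an illustration under our own assumptions, not the data model used in the research project: the class `SecurityParameter`, its fields, and the example parameters are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class SecurityParameter:
    """Hypothetical record for one 'cogwheel' in the engineering information."""
    name: str
    options: tuple[str, ...]          # settings an engineer can choose from
    value: str | None = None          # None: no design decision recorded yet
    insecure_by_design: bool = False  # legitimate feature with security impact

    def decide(self, value: str) -> None:
        # Only accept one of the declared options.
        if value not in self.options:
            raise ValueError(f"{value!r} is not an option for {self.name}")
        self.value = value


params = [
    SecurityParameter("authentication mechanism", ("none", "password", "certificate")),
    SecurityParameter("safety shutdown", ("electronic", "mechanical")),
    SecurityParameter("online PLC logic update", ("enabled", "disabled"),
                      insecure_by_design=True),
]
params[1].decide("mechanical")

# Which cogwheels still lack a decision, and which are insecure-by-design features?
undecided = [p.name for p in params if p.value is None]
flagged = [p.name for p in params if p.insecure_by_design]
print("undecided:", undecided)
print("insecure by design:", flagged)
```

Flagging insecure-by-design features explicitly is one simple way to keep them visible next to the more obvious parameters.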

Filter 2: High-consequence events

You have probably heard of the second filter before, maybe from INL’s CCE (Consequence-driven Cyber-informed Engineering) method, but you may not have thought of it as a filter: high-consequence events (HCEs).

To identify high-consequence events, you need to answer the question: What would make a really bad day in your plant? Many HCEs are safety-related, but not all.

I once worked on a project at a surface coal mining company, and they said their worst nightmare would be the coal excavator falling over.

Coal excavator. Source: Martin Roell, CC BY-SA 2.5, https://commons.wikimedia.org/w/index.php?curid=841443

Now, coal excavators can be the size of the Eiffel Tower, and they are, as one can imagine, custom-made. And of course, no one has a spare Eiffel Tower in their garage. If the excavator falls over and breaks, it takes months to get a new one. That would be a prime high-consequence event, even if no one gets hurt.

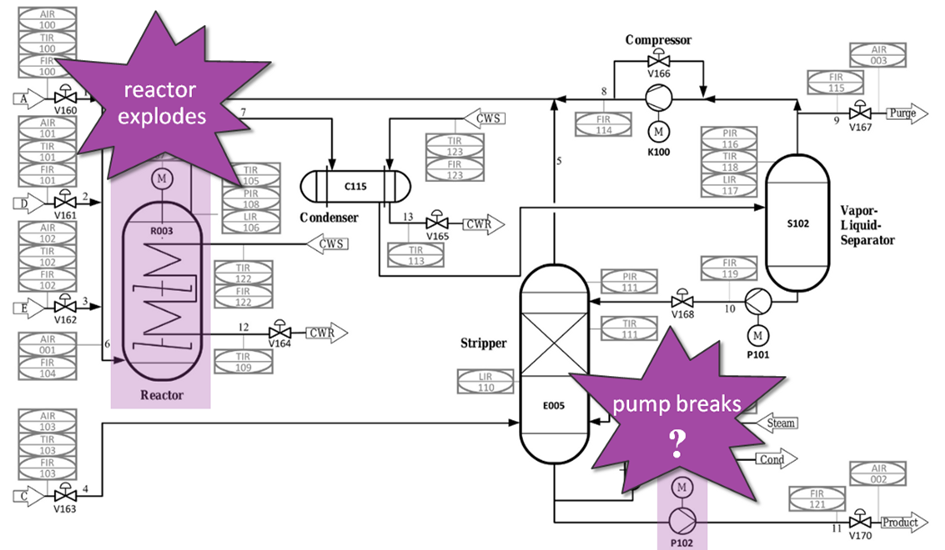

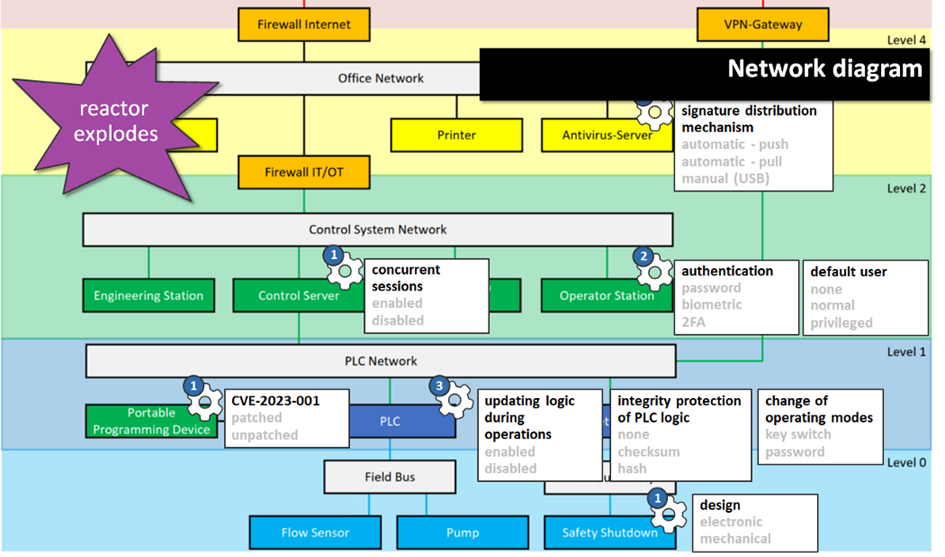

In our example, an explosion in the reactor could be a high-consequence event, but what’s important is that you get to decide what your HCEs are.

You probably only have about a dozen really critical high-consequence events, and it pays off to invest some time in deciding which they are. The final decision on what your high-consequence events are should be a management decision.

High-consequence events are the “why” behind your security engineering. Think of them as the North stars that guide your engineers and keep them on track, making sure they spend their security engineering time on the risks that really matter.

Okay, so let’s try this out and put a North star into our example:

Can you already see more clearly how you should decide about each security parameter?

…

You don’t?

Me neither. Because there’s still a large gap between the high-level high consequence events and the detailed, low-level security parameters. To bridge this gap, we need our third filter.

Filter 3: Functions

Functions are magical. Instead of looking at a big pile of technology, you’re looking at why that pile of technology exists. Functions add humans, data flows, and a purpose.

Alright, let’s do some magic:

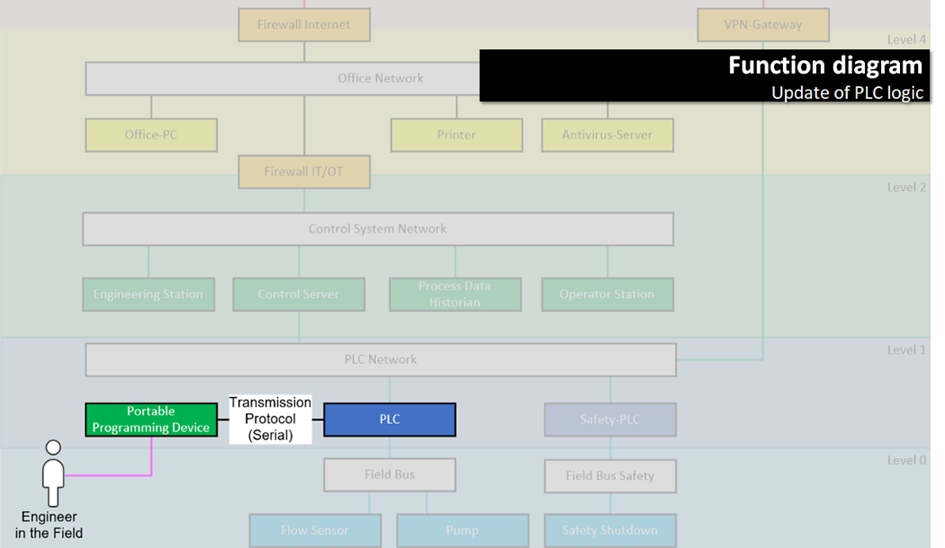

Here’s the pile of technology…

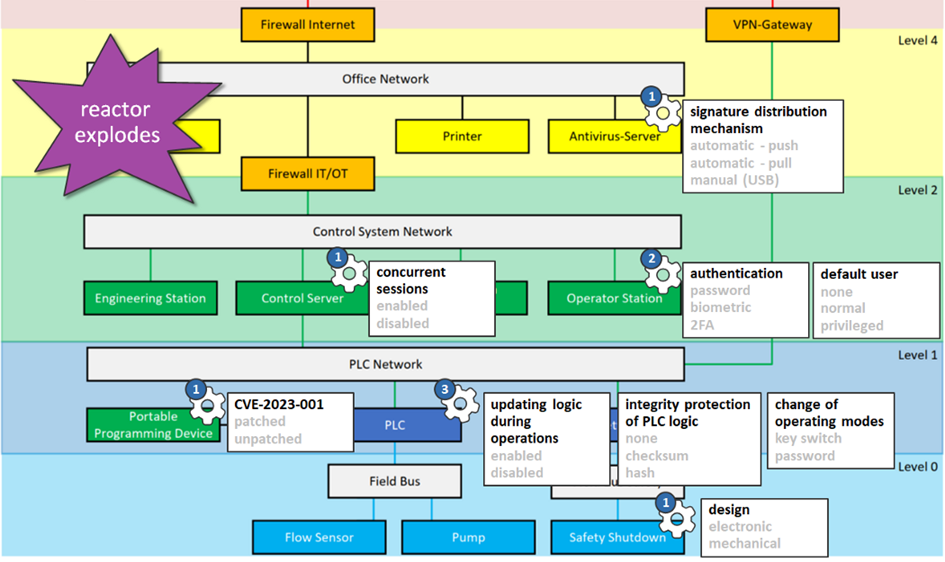

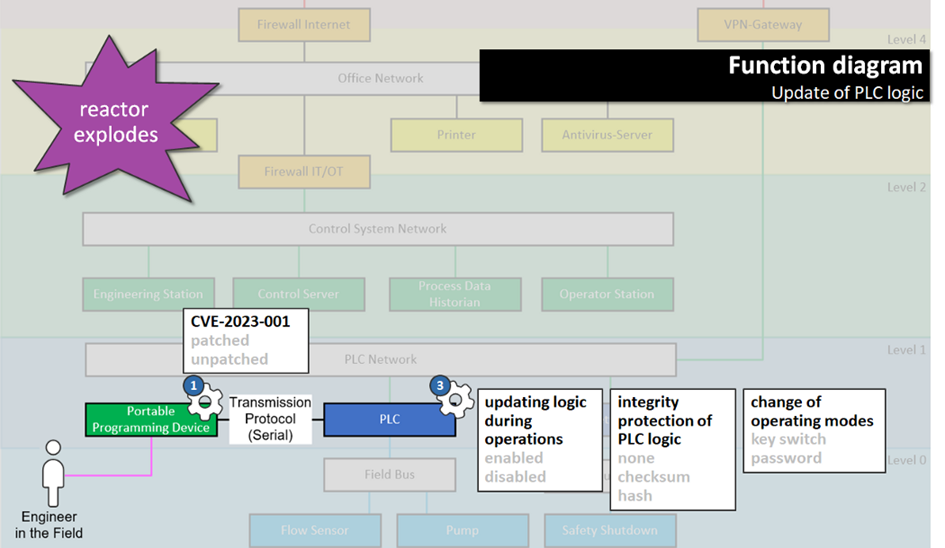

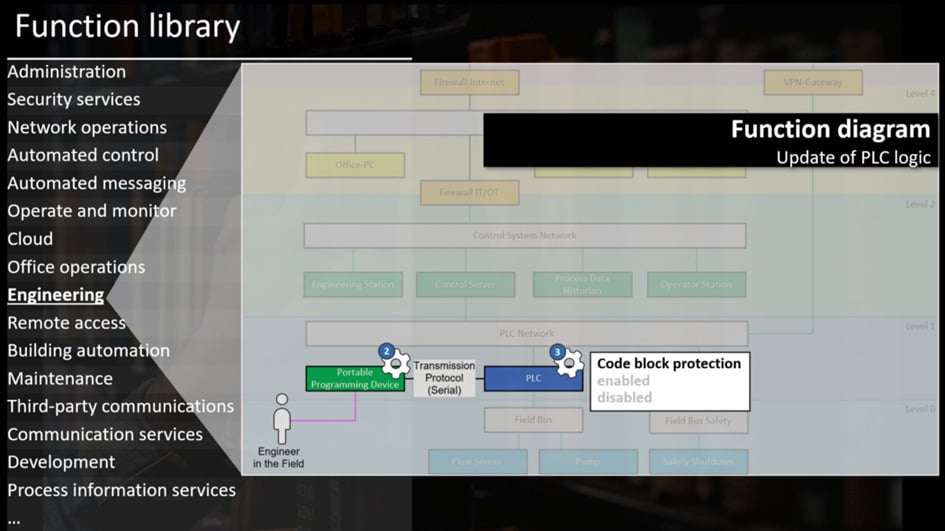

… – and here’s the function “update of PLC logic”.

In contrast to the OT network diagram with all the colorful boxes, functions are accessible even to people who are not knee-deep in the technical details. Anyone can understand the function diagram. Anyone can understand that one thing your engineers are doing with the colorful boxes is updating the PLC logic, how that works, and who does it.

Now we add the security parameters and your North star, the high consequence event, again. Instead of looking at this overwhelming picture…

…you’re now looking at this:

If you go through your system HCE by HCE and function by function, your security design question boils down to: “Which security parameters do I need to adjust to make sure this function can’t lead to this HCE?”
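That question can be read as a filter over two lookup tables. The sketch below assumes a simple mapping from functions to their security parameters and from parameters to the HCEs they could contribute to; all names and links are hypothetical example data. In practice, these links would come out of your engineers’ analysis, not a hard-coded dictionary.

```python
from __future__ import annotations

# Hypothetical example data: the security parameters attached to each function...
functions = {
    "update of PLC logic": ["online PLC logic update", "logic integrity protection",
                            "programming device patch level", "project protection"],
    "process visualization": ["HMI authentication"],
}
# ...and the high-consequence events (HCEs) each parameter could contribute to.
contributes_to = {
    "online PLC logic update": {"reactor explosion"},
    "logic integrity protection": {"reactor explosion"},
    "project protection": {"reactor explosion", "production loss"},
    "HMI authentication": {"production loss"},
}


def relevant_parameters(hce: str, function: str) -> list[str]:
    """Parameters of `function` that could lead to `hce`: the filtered view."""
    return [p for p in functions[function] if hce in contributes_to.get(p, set())]


# Walk the system HCE by HCE and function by function.
for hce in ["reactor explosion"]:
    for fn in functions:
        print(hce, "|", fn, "->", relevant_parameters(hce, fn))
```

Note that a parameter with no link to any HCE (here the patch level) simply drops out of the risk view; it may still need a decision for other reasons.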

To recap the first security engineering simplifier, here are the three filters at a glance:

- security parameters help you focus on the decisions that matter,

- high-consequence events help you focus on the risks that matter, and

- functions help you focus on the systems that matter.

Applying these filters looks easy when I show pretty slides, but of course I’ve cheated. On my slides, all the cogwheels, explosions, and function drawings are already there. Your engineers didn’t have to invest any time to create them.

But your engineers, as you recall, neither have the time nor the security expertise for making up functions and security parameters.

That’s where our next security engineering simplifier comes in:

2nd simplifier: Libraries

Using libraries means your engineers don’t have to create the functions and security parameters. They just have to choose them.

We’re creating libraries in our research. Engineers can browse categories like “automated control” or “Engineering” and pick functions from them. Here’s the example function we’ve been using before.

And then of course, each function in the library has its security parameters directly attached, so your engineers don’t have to make them up either.
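To make “choose, don’t create” concrete, here is a minimal sketch of a function library as a nested lookup: category, then function, then the attached security parameters with their options. The structure and the entries are hypothetical and only reuse names from this article; a real library would carry far more detail.

```python
from __future__ import annotations

import copy

# Hypothetical library: category -> function -> security parameter -> options.
LIBRARY = {
    "automated control": {
        "process control loop": {"online PLC logic update": ["enabled", "disabled"]},
    },
    "Engineering": {
        "update of PLC logic": {
            "online PLC logic update": ["enabled", "disabled"],
            "logic integrity protection": ["none", "hash"],
            "project protection": ["none", "password", "certificate"],
        },
    },
}


def browse(category: str) -> list[str]:
    """List the functions an engineer can pick from in one category."""
    return sorted(LIBRARY[category])


def pick(category: str, function: str) -> dict[str, dict]:
    """Copy a library function into a project; every parameter starts undecided."""
    params = copy.deepcopy(LIBRARY[category][function])  # project edits stay local
    return {name: {"options": opts, "value": None} for name, opts in params.items()}


print(browse("Engineering"))
project_fn = pick("Engineering", "update of PLC logic")
print(sorted(project_fn))
```

The deep copy is a deliberate choice: the project instance can be adjusted without silently changing the shared library entry.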

Are you an ICS manufacturer? Because this is where you should sit up and take notes.

Maybe you’ve been in deadlock situations like these:

The manufacturer asks the asset owner “what do you need from our components regarding security?” and the asset owner replies “I don’t know, what are the security options for your components?”

Or even worse: You come to a legacy plant where the asset owner uses your components, but you find that they haven’t used all the beautiful security features you’ve built in.

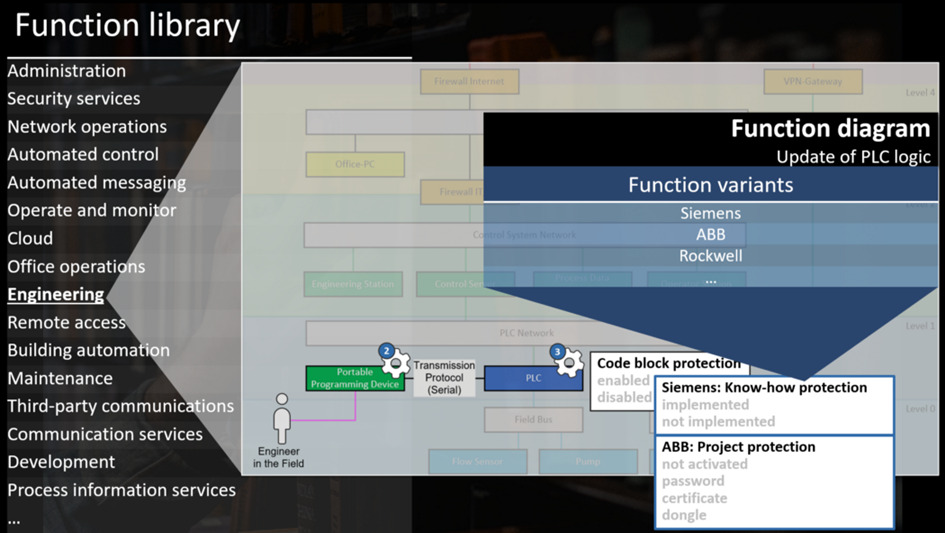

Well, for situations like these, function and security parameter libraries are your chance. If you provide your own vendor-specific function and security parameter libraries, the function “update of PLC logic” will of course look different for, e.g., ABB or Siemens, and so will the security parameters:

With a vendor-specific library and recommendations for setting security parameters, you can effectively communicate your security philosophy to your asset owners. “Look”, you can say “this is how you should be updating PLC logic in our system. And by the way, we recommend choosing the project protection option ‘certificate’ for protecting that logic”.

Soon, dear manufacturers, you will need to document something like this anyway, with regulations like the European Cyber Resilience Act lurking around the corner and making security part of conformity labels.

We’re still looking for research partners who want to try out building function and security parameter libraries for their components. If you’re interested, let me know.

If you’re an asset owner: These libraries are also excellent governance tools. If manufacturers can use them to communicate their security philosophy, so can you. You can define functions, say for secure remote access, along with security parameters and their required values, clearly communicating “this is how, at our plant, we’re handling remote access.”
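Used as a governance tool, a library entry with required values can be checked automatically against a site’s actual settings. The sketch below assumes a hypothetical “secure remote access” policy; the parameter names and required values are illustrative placeholders, not a recommendation.

```python
from __future__ import annotations

# Hypothetical plant policy for a "secure remote access" function:
# each security parameter mapped to the value(s) the asset owner accepts.
REQUIRED = {
    "remote access authentication": {"multi-factor"},
    "remote session approval": {"operator approval"},
    "remote access path": {"jump host"},
}


def check_against_policy(actual: dict[str, str]) -> list[str]:
    """Return a readable line for every parameter that deviates from the policy."""
    deviations = []
    for param, allowed in REQUIRED.items():
        value = actual.get(param)  # a missing setting counts as a deviation
        if value not in allowed:
            deviations.append(f"{param}: got {value!r}, expected one of {sorted(allowed)}")
    return deviations


site_config = {
    "remote access authentication": "password",
    "remote session approval": "operator approval",
}
for line in check_against_policy(site_config):
    print(line)
```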

To recap, libraries are an important building block in simplifying security engineering if you have no time, no security expertise, and limited budget, because they help encapsulate security knowledge in a way that is reusable.

The filters and libraries mainly help identify the security decisions that matter. Our third simplifier helps when it comes to making and documenting them:

3rd simplifier: Diagrams

Engineers are no security experts. Managers are no engineering experts. And nothing communicates complex information as effectively to non-experts as a diagram.

You’ve already seen proof of this: the function diagram. Even if you’re not knee-deep in ICS engineering, you were able to quickly understand roughly how that pile of technology works from a single example function diagram. So if you want your engineers to communicate their security decisions precisely, request diagrams.

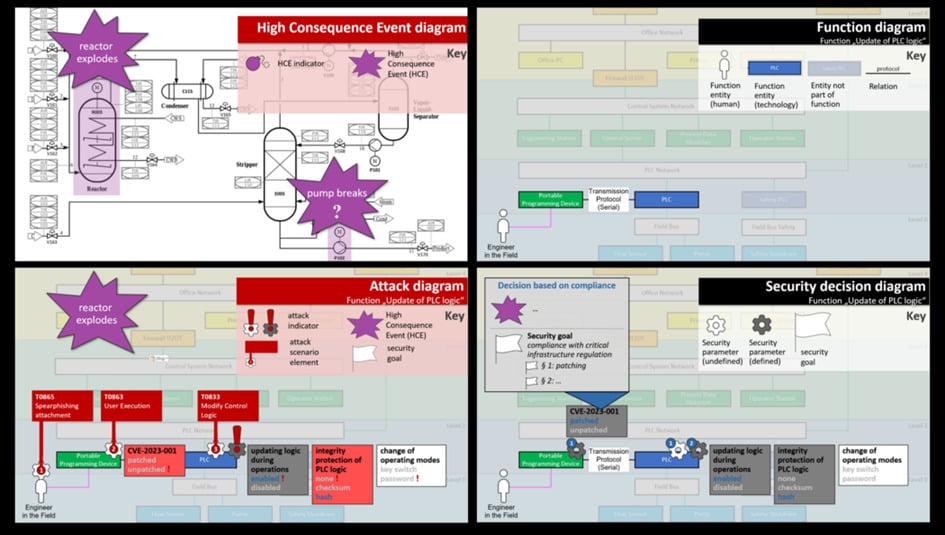

We’ve drafted four diagram types. Each diagram supports a specific step in security design decision-making, and shows only the information relevant to that purpose:

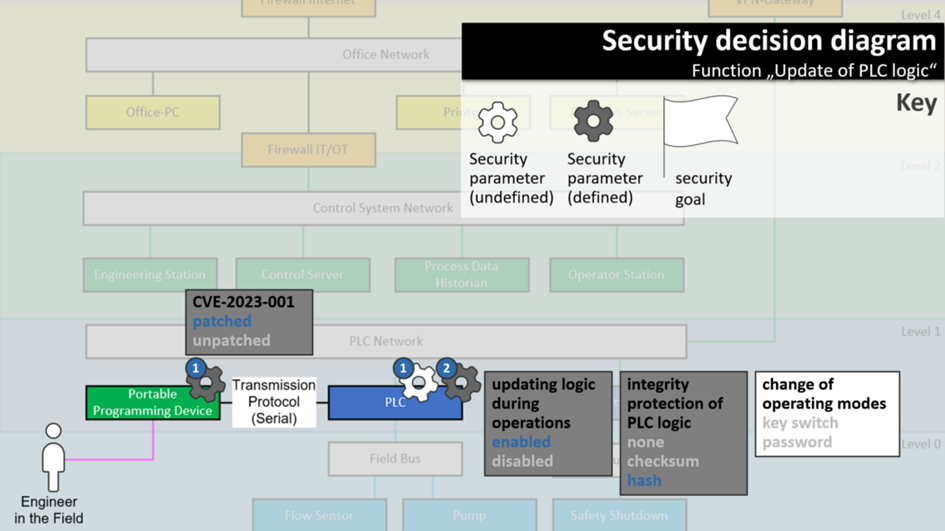

You already know the two in the top half: the high-consequence event diagram and the function diagram. Now, let’s take a deeper look at the third diagram, called the security decision diagram. It helps your engineers actually make security decisions, but also document and communicate these decisions so that they can be traced back.

Here is an example to illustrate: We see four security parameters. For three of them (which are grey), let’s assume the decisions have been made, and we want to know why.

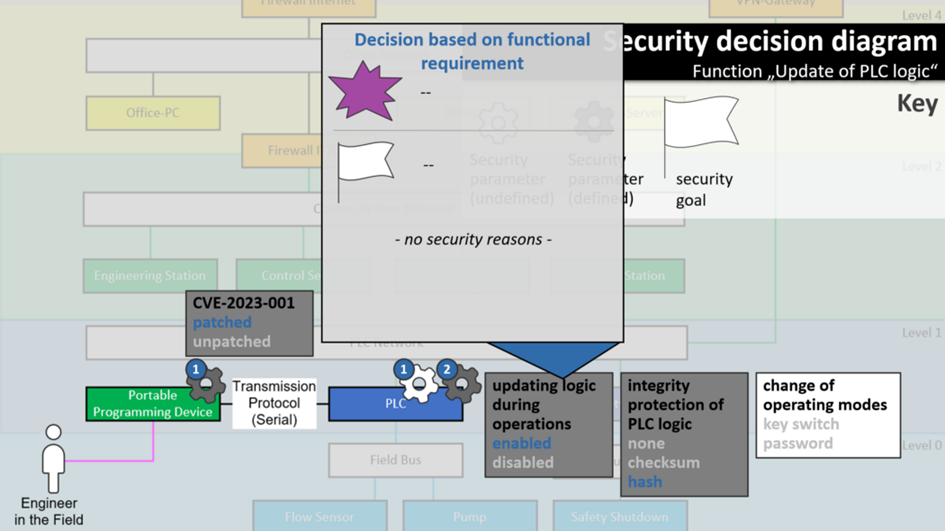

Example 1: Decision based on a functional requirement

To begin with, updating logic during operations has been enabled. Wondering why, since this sounds detrimental to security? Let’s see:

It may in fact be detrimental to security, but security had no say in this. It was a decision made based on a functional requirement, not for security reasons. Nevertheless, it is a decision that impacts security and should be documented as such.

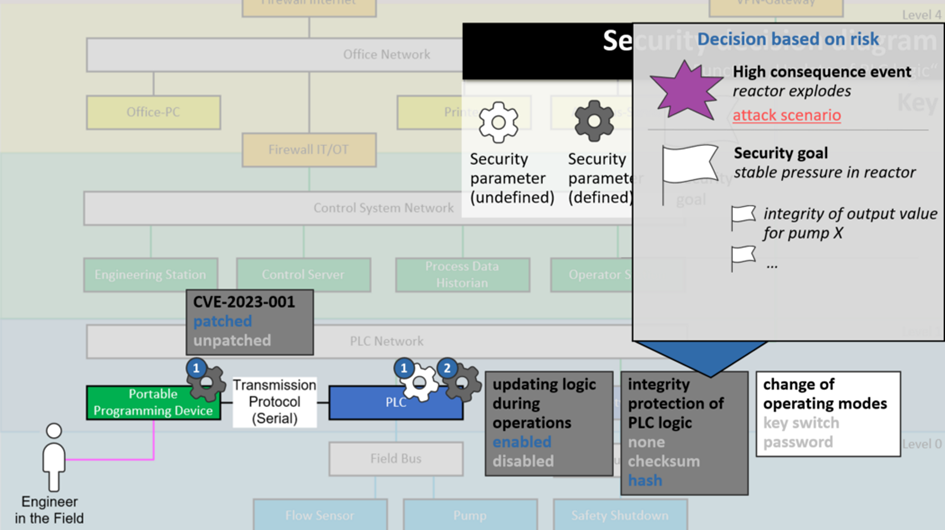

Example 2: Decision based on risk

Next is the integrity decision. Integrity protection of PLC logic using hashes? This sounds like a hassle to implement, if it’s technically feasible at all.

Clicking on it, you can see it was actually a risk-based decision, and it was tied to a high-consequence event you deemed important: the reactor explosion.

You could even click on the red link and switch to an attack diagram to see how exactly that security parameter could contribute to causing a reactor explosion:

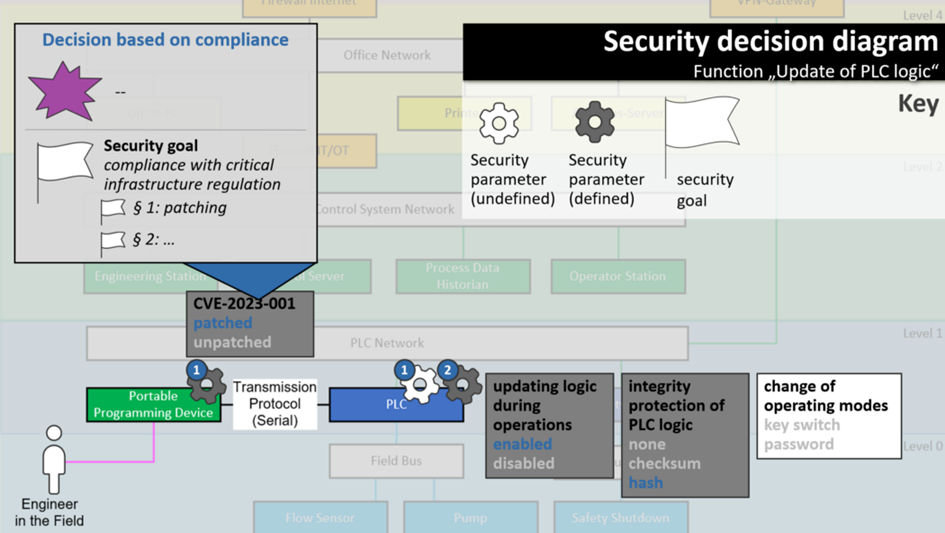

Example 3: Decision based on compliance

And third, the decision to patch this particular (fictional) vulnerability in the PLC programming device. Sigh, patching in ICS… is there really a risk that justifies that?

As you can see, there’s no specific risk, but it doesn’t matter. Because unfortunately, critical infrastructure regulation requires you to patch anyway. This was a compliance-based decision.

Decision traceability matters!

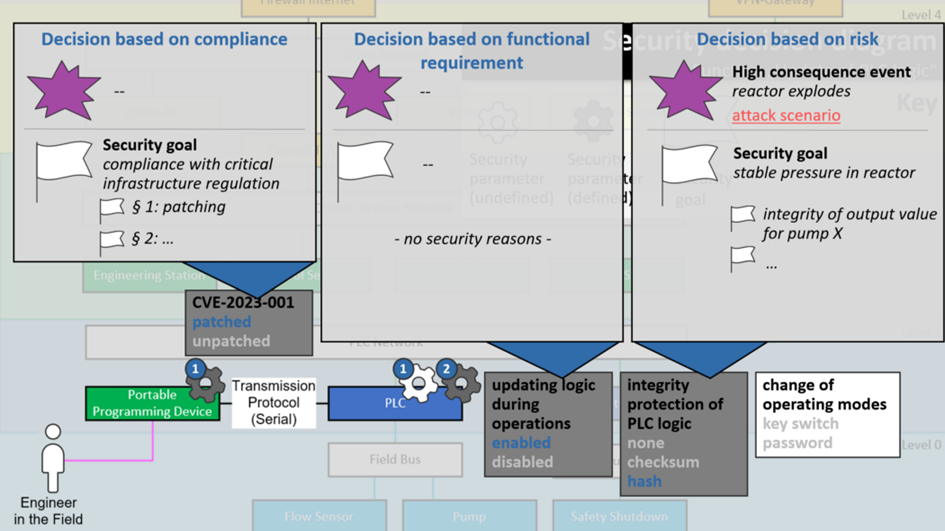

If you look at all three example decisions next to each other, two things become clear.

First, the importance of decision traceability.

Traceability is important not only for security audits, but also during operations: If you change something, you want to know if it affects security, and why.

And most importantly, remember that security engineering is all about making informed decisions to say NO to certain security measures. Saying no to measures doesn’t work without traceability. Especially when you decide NOT to take a certain security measure, you want to know why.

What also becomes clear from this comparison is that there are lots of perfectly valid reasons for security design decisions. As much as we in our security bubble would like to believe otherwise, not all security decisions are risk-based, and they don’t have to be.

This is why a risk assessment does not work as the only means to make your security design decisions. I’d even say you shouldn’t focus on making sure all your decisions are risk-based, but on making sure all of them are traceable.
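The three examples can be captured as decision records that carry their basis and a reference to what they trace back to. This is a minimal sketch under our own assumptions: the `Basis` categories mirror the three examples above, while the class names, the reference strings, and the fourth, unjustified decision are hypothetical. The check flags every decision, including a NO, that has no recorded reason.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Basis(Enum):
    FUNCTIONAL_REQUIREMENT = "functional requirement"
    RISK = "risk"
    COMPLIANCE = "compliance"


@dataclass
class Decision:
    parameter: str
    value: str
    basis: Basis | None = None
    reference: str = ""  # what the decision traces back to (requirement, HCE, regulation)

    def is_traceable(self) -> bool:
        return self.basis is not None and bool(self.reference)


decisions = [
    Decision("online PLC logic update", "enabled",
             Basis.FUNCTIONAL_REQUIREMENT, "update logic during operations"),
    Decision("logic integrity protection", "hash", Basis.RISK, "HCE: reactor explosion"),
    Decision("programming device vulnerability", "patched",
             Basis.COMPLIANCE, "critical infrastructure regulation"),
    Decision("project protection", "none"),  # a NO without a recorded reason
]

untraceable = [d.parameter for d in decisions if not d.is_traceable()]
print(untraceable)
```

The check deliberately does not ask whether a decision is risk-based, only whether it is traceable.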

Quick recap again: Diagrams guide your engineers

- to make their security design decisions

- to efficiently communicate them, and

- to ensure the decisions are traceable at all times.

In other words: If you want security decisions made, documented, and communicated with precision, request diagrams from your engineers.

Now, if you do request diagrams, you’re likely to get “we don’t have time for pretty pictures” as an answer. And they’re right – they don’t. I haven’t drawn the diagrams you’re seeing by hand, and neither should you.

4th simplifier: Tool support

It’s the 21st century.

It wouldn’t occur to you to expect your SOC operator to make security operations decisions without any tool support, so why would you expect that from your security engineers making security design decisions?

Also, in all other engineering domains, we’re looking into creating machine-readable versions of engineering data and diagrams, and there’s no reason why security engineering should lag behind.

I’m not saying you should be using a tool just because. But here are three good reasons to consider tool support:

- Single source of truth. You want to have a single source of truth for all your security engineering information, your high-consequence events, functions, security parameters, and traceable decisions, and the diagrams should simply be ways to view this information.

- Digital exchange. You want to be able to exchange security engineering information, for example function and security parameter libraries with your manufacturers. Once you have all these handy libraries, you don’t want to spend ages copying their information into a format you can use. You may also want to integrate security engineering information into your digital twins. All this requires your security engineering information to be accessible in a digital, ideally standardized, format.

- Guidance. And last, don’t underestimate the workflow guidance that an electronic tool can provide, especially for ICS engineers whom you cannot expect to be familiar with security engineering workflows.
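The first two reasons can be illustrated together: one model as the single source of truth, serialized for exchange, with a “diagram” being just a view computed from it. This is a minimal sketch using an ad hoc JSON layout of our own, not any standardized exchange format; all keys and values are hypothetical.

```python
from __future__ import annotations

import json

# Hypothetical single source of truth: HCEs, functions, parameters, decisions.
model = {
    "hces": ["reactor explosion"],
    "functions": {"update of PLC logic": ["logic integrity protection",
                                          "project protection"]},
    "decisions": {
        "logic integrity protection": {"value": "hash", "basis": "risk",
                                       "reference": "reactor explosion"},
    },
}


def export_model(m: dict) -> str:
    """Serialize the model for exchange, e.g. with a manufacturer."""
    return json.dumps(m, indent=2, sort_keys=True)


def import_model(text: str) -> dict:
    return json.loads(text)


def function_view(m: dict, function: str) -> list[tuple[str, str]]:
    """One possible 'diagram': a function's parameters and their decided values."""
    return [(p, m["decisions"].get(p, {}).get("value", "undecided"))
            for p in m["functions"][function]]


payload = export_model(model)
restored = import_model(payload)
print(function_view(restored, "update of PLC logic"))
```

Because every view is computed from the same model, a changed decision shows up in all diagrams at once instead of having to be redrawn.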

Here’s a summary of all four security engineering simplifiers:

To wrap things up, let’s go back to the SANS survey for a second and reveal the fourth item on the list, marked yellow:

Look at that! Some security design decisions at last: Securing your ICS. But by hiring consultants.

Phew. I feel qualified to say this because I am a consultant myself: Think twice about that one. Someone who doesn’t know your filters (your high-consequence events, functions, and security parameters) can quite likely only come up with a security controls checklist. And following such a checklist without any filter is, as you know by now, a waste of time and money.

Anyone with a checklist can tell you which security measures you should take.

Only you can decide which ones you don’t take.

So instead of investing in people telling you which security measures you should be taking, invest in making an informed decision about which security measures NOT to take.

It’s entirely doable.

Find your filters,

leverage libraries,

draw diagrams,

and try some tool support.

Encourage and empower your engineers to make these decisions, to do some security engineering.

I sincerely hope that if you read this article a couple of years from now, this sounds like a strange thing to say. Because at that point, professionalizing your security design decisions, just as professionalizing your security operations decisions, will have become a total no-brainer.

So I hope when you next review your ICS security priorities, you sneak some serious security engineering in.

This is the transcript of a presentation held at S4x23 in Miami South Beach on Feb 14, 2023. A YouTube video of the original presentation will be published on S4’s YouTube channel.

This work was created as part of the research project IDEAS, funded by the German Federal Ministry of Education and Research (BMBF). A list of all IDEAS publications can be found here.